Lab 12 Path Planning

The goal of this lab was to navigate an arena and go from waypoint to waypoint using localization and feedback controls.

Shoutout to Daria! We worked together on this lab :D

The Plan

This lab combines several different skills we used throughout the semester. We planned to rely heavily on orientation control, both for localizing and for moving around the arena.

Implementation

Waypoint 1->2 + Open vs Closed Loop Control

We chose to hard-code the motion between waypoints 1 and 2, because the exact angle and distance required are already known without localization. We used orientation control to turn 45 degrees and open loop control to drive forward to the next point.

It is worth discussing why we used open loop control for all linear/translational motion. We chose this rather than linear PID or a Kalman filter with ToF data because not all waypoints had strong reference points for the sensor to ping off of. Originally, we made the drive time proportional to the distance to travel, based on the trajectory calculation discussed in the next section. However, this proved fairly inconsistent, so we turned to hard-coding the drive times based on known characteristics of the robot and the known distances from waypoint to waypoint. Even though the robot didn't always reach the correct spot and would have to relocalize, we found the angle to be more important than the distance travelled. The hard-coded open loop timing actually handled inconsistencies better than the proportional timing.
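To make the two timing strategies concrete, here is a minimal Python sketch. The speed constant, the per-leg drive times, and the function names are all hypothetical placeholders for illustration, not values measured on our robot.

```python
# Hypothetical calibration constant: average forward speed at a fixed PWM (m/s).
ASSUMED_SPEED_M_PER_S = 0.5

# Hypothetical hand-tuned drive times (seconds) for each known leg of the course,
# keyed by (start waypoint, end waypoint).
HARD_CODED_DRIVE_TIME_S = {
    (1, 2): 0.9,
    (2, 3): 1.1,
}


def proportional_drive_time(distance_m, speed=ASSUMED_SPEED_M_PER_S):
    """Drive time that scales linearly with the computed distance."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    return distance_m / speed


def hard_coded_drive_time(from_wp, to_wp):
    """Drive time looked up from a table tuned separately for each leg."""
    return HARD_CODED_DRIVE_TIME_S[(from_wp, to_wp)]
```

The proportional version inherits any error in the localized position, since a bad belief changes the computed distance; the lookup table ignores the belief entirely and depends only on which leg is being driven.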

Calculating Trajectory + Bayes Filter

This was arguably the most important function of this lab. After localizing, the Bayes filter would calculate a belief of the robot's location on the Python side. Also on the Python side, we would use the current belief (x-bar, y-bar) and the next waypoint (x, y) to determine a trajectory in the form of an angle and a distance, similar to the odometry motion model (rotation, translation, rotation) but without the final rotation. We omitted the second rotation because the robot always reset to zero degrees before localizing at a new location anyway.

The function itself is just geometry, and can be seen below. We chose to implement it on the Python side because we felt it would be faster and less computationally heavy for the Artemis...
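A minimal sketch of that geometry, assuming the robot's 0-degree heading points along the +x axis and counterclockwise angles are positive. The function name follows the pseudocode in this write-up, but the signature, units, and angle convention are assumptions rather than the exact code we ran.

```python
import math


def calculate_trajectory(x_bar, y_bar, goal_x, goal_y):
    """Return (heading_deg, distance) from the believed pose to the goal.

    heading_deg is measured from the +x axis, counterclockwise positive,
    in the range [-180, 180]. distance is in the same units as the inputs.
    """
    dx = goal_x - x_bar
    dy = goal_y - y_bar
    heading_deg = math.degrees(math.atan2(dy, dx))  # first rotation
    distance = math.hypot(dx, dy)                   # translation
    return heading_deg, distance
```

Because the robot is already at zero degrees when this is called, the returned heading can be passed straight to orientation control as the target angle.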

Plan, Summarized

The plan, in pseudocode:

    start
    orientation_pid(45 degrees)
    forward(to get to waypoint)
    now at waypoint 2
    while(there are still waypoints remaining){
        orientation_pid(return to 0 degrees)
        localize!
        (x_bar, y_bar) = run bayes filter update step
        (goal_x, goal_y) = next waypoint from list
        calculate_trajectory(current coords, goal coords)
        orientation_pid(face next waypoint)
        forward(to get to waypoint)
    }
    end, should be at origin!

Python Loop

As Python code, the above plan ended up like this:

In testing, the robot consistently turned roughly 55 degrees past the end of its localization scan without collecting data during that extra rotation. We therefore added a correction term to account for it. We experimented with applying the correction on both the Python side and the Artemis side, as discussed later.
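As an illustration of how such a correction could be applied on the Python side, here is a hedged sketch. It assumes the overturn is in the positive (counterclockwise) direction and that the turn command is relative to the robot's current heading. The constant, the sign convention, and all function names are hypothetical.

```python
import math

# Approximate extra rotation observed after the localization scan (degrees).
# The sign (counterclockwise positive) is an assumption.
OVERTURN_CORRECTION_DEG = 55.0


def wrap_angle_deg(angle):
    """Wrap an angle in degrees to the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def corrected_turn(target_heading_deg, overturn_deg=OVERTURN_CORRECTION_DEG):
    """Relative turn needed to face the target, given the robot has
    already overturned by overturn_deg from its zero heading."""
    return wrap_angle_deg(target_heading_deg - overturn_deg)


def plan_leg(belief_xy, goal_xy):
    """Return (turn_deg, distance) for one leg, with the correction applied."""
    dx = goal_xy[0] - belief_xy[0]
    dy = goal_xy[1] - belief_xy[1]
    heading = math.degrees(math.atan2(dy, dx))
    return corrected_turn(heading), math.hypot(dx, dy)
```

Wrapping the result keeps the commanded turn on the shorter side of the circle, so a small correction never becomes a near-full rotation.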

Results!

In the end, we only got one successful-ish run; in reality it was more of a successful half-run. Our beliefs and calculated trajectories were strong at the start, and the video shows that the first 2-3 waypoints were fairly successful. However, localization would eventually be a little off axis, and the aforementioned overturning would throw off both the calculated belief and the calculated trajectory. Combined with our hard-coded linear motions, this error propagated and made the robot's overall motion less and less accurate, until it was essentially stuck behind one of the obstacles. Battery life was also a challenge, as the battery seemed to drain much faster with localization and path planning involved.

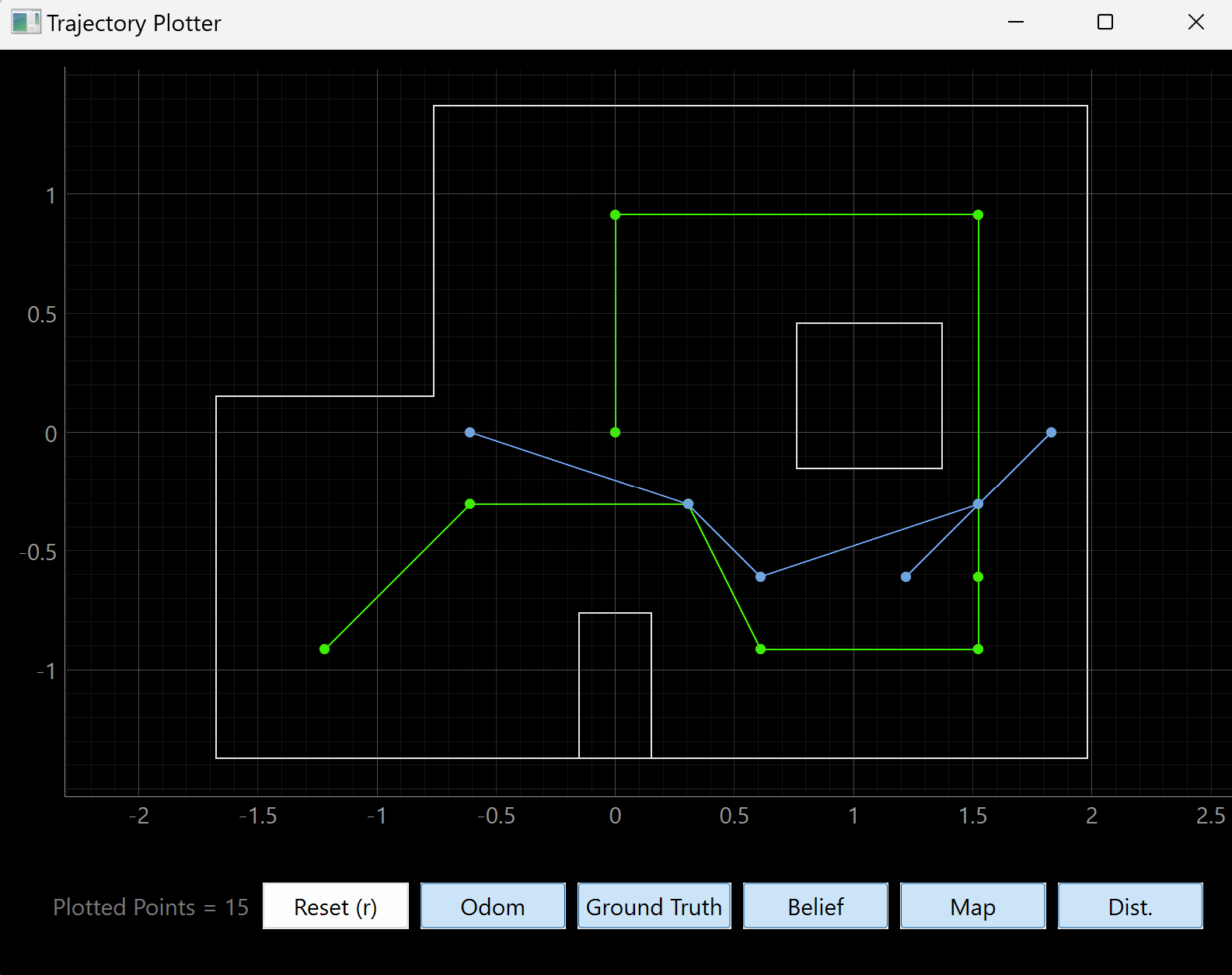

As shown in the video, our localization at waypoints 2 and 3 was spot on, and our calculated trajectories worked out well. But between waypoints 3 and 4, and between 4 and 5, we calculated the correct orientation and distance but stopped short of our target. From there, it became more and more difficult to come up with realistic trajectories from point to point. Still, we are very proud that our localization worked really well, even though it took 40-60 seconds each time, which was a bit annoying.

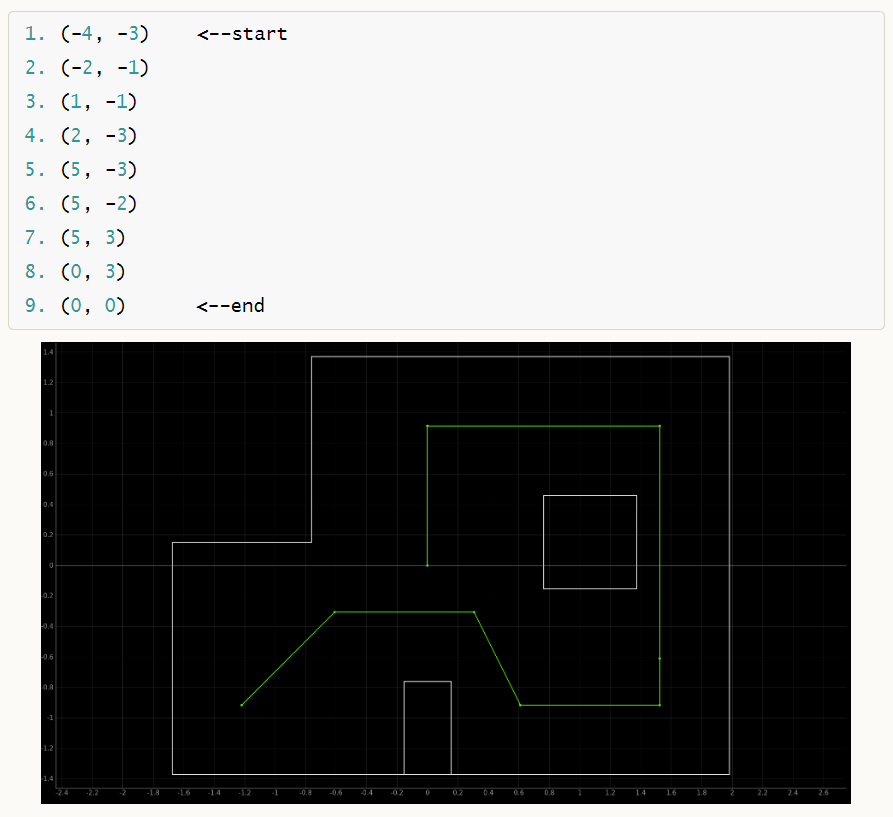

The beliefs (blue) versus the true waypoints (green) are shown below.

In case the video embed doesn't work, here is the link: https://youtu.be/jDAT1IzhXlI

Trying to fix our trajectories...

After the above video, we spent a long time trying to improve our mapping protocol and overall trajectories, hoping to improve the path planning as a whole. First, we added a reset-to-zero-degrees call to orientation control at the end of the mapping command on the Arduino side by setting the orientation flag to true. This, however, unearthed other inconsistencies and redundancies throughout the code, such as counter variables being reset in multiple places, which we also started trying to fix. While fixing the code, though, we were not able to finish testing our full localization loop to see whether our trajectory calculations had improved. Nonetheless, some Arduino snippets are below.

Discussion

Ultimately, this lab was one of the most satisfying, even though we did not get a full run in by the end. It combined PID with estimation and localization and felt like a true culmination of our work. I am grateful to Daria for being my partner, and to Jonathan and all the TAs for their amazing teaching!! So, thanks everyone!